Digitally Twinning a Human

The defence industry has been exploring and adopting Modelling, Virtual Simulation and Synthetic Environments for a variety of purposes: decision support and training, mission effectiveness, and testing and investigating new technologies and technical concepts. Under Dstl's Serapis SSE Framework Agreement ('SSE' stands for Simulation and Synthetic Environments Excellence), QinetiQ Training and Simulation tasked us with investigating the key technologies required for a human digital twin concept demonstrator. Given our 12-year history in 3D medical visualisation, with several world firsts, this project was right up our alley despite the time constraints! The project covered three stages: a full digital 3D body scan that had to be highly precise and accurate (scanning), modelling of a skeletal rig (rigging), and visualisation and articulation of the model in sync with the person's movements (syncing).

Basically, we had to:

Acquire a 3D body scan that was highly precise and accurate and that met at least the tolerances specified in the standard ISO 20685:2010.

Produce a virtual armature (a skeleton or rig) that accurately represented the person's articulations, using motion capture, biomechanical analysis or other techniques. Produce a 3D digital representation combining the body surface (the mesh) and the virtual armature (the rig), with the mesh and the rig accurately fitted to each other.

Enable the 3D digital representation to move in sync with the scanned individual's movements, using data from wearable inertial sensors.

Below is an overview of the three tasks: scanning, rigging and syncing.

Scanning:

Our starting point was a research paper on the required ISO 20685:2010 standard. We contacted its authors, who had developed an algorithm called Gryphon that was reported to be ISO compliant, and hired the same body scanner they had used, the SizeStream SS20. Below is our virtual 3D model of the scanner, including the participant being scanned, which is also available on Sketchfab.

Five consecutive scans were taken with the Gryphon method. The Gryphon algorithm discards outlier measurements during this process to increase the accuracy of the final results. The SizeStream software then generates a 3D point cloud for each of the five scans. Below is the raw point cloud output of one of our scans. Note that the handholds and scanner hardware are still visible.
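The Gryphon method's exact outlier rule is not described here, so the following is only a minimal sketch assuming a generic robust-statistics approach: each body dimension is measured once per scan, and a measurement is discarded when its modified z-score, based on the median absolute deviation (MAD), exceeds the conventional cut-off of 3.5. The function name `robust_mean` and the waist measurements in the example are hypothetical.

```python
from statistics import mean, median


def robust_mean(values, threshold=3.5):
    """Average repeated measurements of one body dimension, discarding outliers.

    Hypothetical sketch: outliers are flagged with the modified z-score
    (0.6745 * deviation / MAD), a generic robust-statistics rule, NOT the
    published Gryphon method.
    Returns (mean_of_kept_values, list_of_discarded_values).
    """
    if not values:
        raise ValueError("need at least one measurement")
    med = median(values)
    # Median absolute deviation: a spread estimate one bad scan cannot inflate
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the measurements agree exactly; anything else is an outlier
        kept = [v for v in values if v == med]
    else:
        kept = [v for v in values if abs(0.6745 * (v - med) / mad) <= threshold]
    discarded = [v for v in values if v not in kept]
    return mean(kept), discarded


# Hypothetical waist circumferences (mm) from five consecutive scans
print(robust_mean([812.0, 814.0, 813.0, 811.0, 842.0]))  # (812.5, [842.0])
```

Using the median and MAD rather than the mean and standard deviation means a single bad scan, such as one with an arm artifact, cannot drag the rejection threshold towards itself.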

A surface mesh is generated from the point cloud data. This surface mesh is used for all further steps in the generation of a digital twin.

To check accuracy, we overlaid the surface meshes generated from the five consecutive scans in 3D, each in a different colour. Most of the differences result from the participant moving slightly in the 1-second interval between scans; however, scanning artifacts are visible on the left arm of the 5th (blue) scan. The Gryphon method removes these artifacts as outliers when generating anthropometric landmarks and body measurements. You can explore the overlay yourself on Sketchfab. In the overlaid surface meshes from the five consecutive scans below, you can see the following:

Large areas of a single colour signify movement of large, homogeneous regions of the body (such as the head and chest). Areas covered in small patches of many different colours signify parts of the body that experienced little to no movement, so that the five coloured surfaces occupy the same space. This is most noticeable in the hands and feet, which were anchored (by the floor and handholds) during the scan. The previously mentioned artifact on the left arm is clearly visible (in green) with this method of visualisation.
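The coloured overlay gives a visual impression of movement between scans. A numeric complement, sketched below under our own assumptions (it is not part of the SizeStream or Gryphon tooling), is to measure, for every vertex of one scan, the distance to the nearest vertex of another scan and flag points beyond a tolerance. The functions `nearest_distances` and `split_by_movement`, the tolerance and the sample points are hypothetical.

```python
import math


def nearest_distances(reference, other):
    """For each point in `other`, the distance to its nearest point in `reference`.

    Brute-force O(n*m) search: fine for a sketch, but a real scan with
    hundreds of thousands of vertices would need a spatial index (e.g. a k-d tree).
    """
    return [min(math.dist(p, q) for q in reference) for p in other]


def split_by_movement(reference, other, tolerance):
    """Split the points of `other` into (still, moved) relative to `reference`.

    `tolerance` is a hypothetical threshold in the scan's units (e.g. mm).
    """
    still, moved = [], []
    for p, d in zip(other, nearest_distances(reference, other)):
        (still if d <= tolerance else moved).append(p)
    return still, moved


# Hypothetical vertices from two consecutive scans
scan_a = [(0, 0, 0), (10, 0, 0), (0, 10, 0)]
scan_b = [(0, 0, 1), (10, 0, 0.5), (0, 25, 0)]
print(nearest_distances(scan_a, scan_b))  # [1.0, 0.5, 15.0]
```

Points in the "still" group correspond to the multicoloured patches in the overlay (such as the anchored hands and feet), while the "moved" group corresponds to the large single-colour areas.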

Rigging:

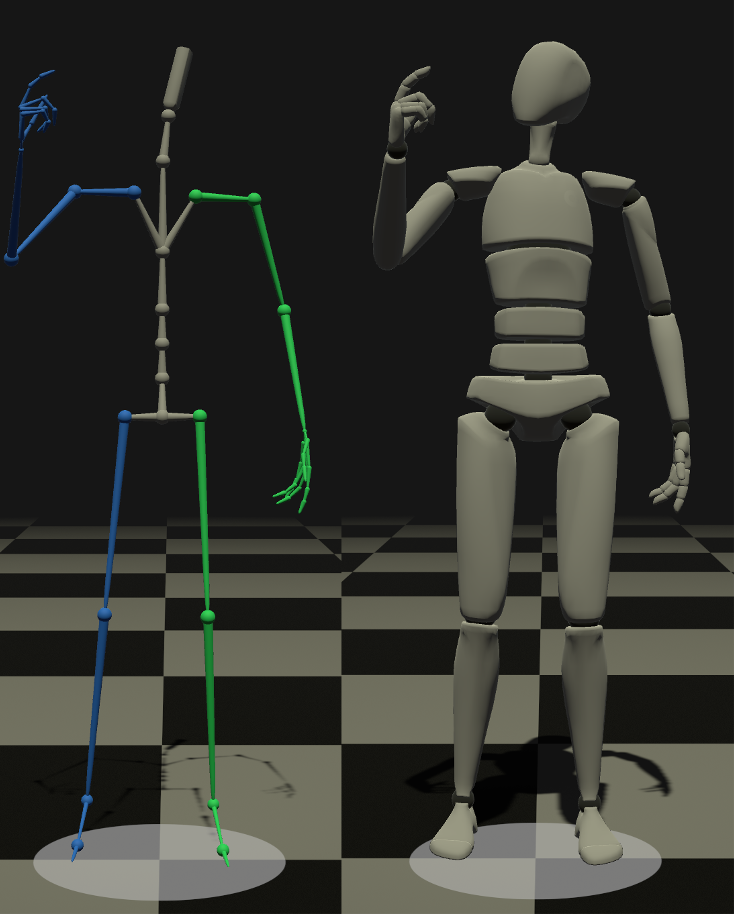

Next, the 3D surface scan needed to be rigged. A skeletal rig is a digital representation of the key bones of the human skeleton. The SizeStream comes with the rigging software Mixamo; besides Mixamo, we also used DigiDoppel. Exporting from SizeStream via Mixamo produced a skeletal rig with 41 bones, whereas exporting from DigiDoppel produced one with 52 bones.
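One way to picture the difference between a 41-bone and a 52-bone rig is as a tree in which every bone except the root has exactly one parent. The sketch below assumes a rig expressed as a simple `{bone: parent}` mapping, a hypothetical format rather than the actual Mixamo or DigiDoppel export. It checks that the hierarchy forms a single tree and lists the chain of bones that influence a given joint. The functions `validate_rig` and `chain_to_root` and all bone names are illustrative only.

```python
def validate_rig(parents):
    """Check that a rig given as {bone: parent or None} forms a single tree.

    Returns the root bone name. Raises ValueError for multiple or missing
    roots, an unknown parent, or a cycle.
    """
    roots = [b for b, p in parents.items() if p is None]
    if len(roots) != 1:
        raise ValueError(f"expected exactly one root bone, found {roots}")
    for bone, parent in parents.items():
        if parent is not None and parent not in parents:
            raise ValueError(f"{bone!r} has unknown parent {parent!r}")
    # Walk each bone up towards the root; revisiting a bone means a cycle
    for bone in parents:
        seen = set()
        while bone is not None:
            if bone in seen:
                raise ValueError(f"cycle detected at {bone!r}")
            seen.add(bone)
            bone = parents[bone]
    return roots[0]


def chain_to_root(parents, bone):
    """List bones from `bone` up to the root: every joint that moves it."""
    chain = []
    while bone is not None:
        chain.append(bone)
        bone = parents[bone]
    return chain


# Hypothetical fragment of a rig (one arm only)
rig = {
    "Hips": None, "Spine": "Hips", "Chest": "Spine",
    "LeftShoulder": "Chest", "LeftArm": "LeftShoulder",
    "LeftForeArm": "LeftArm", "LeftHand": "LeftForeArm",
}
print(validate_rig(rig), len(rig))  # Hips 7
```

A rig with more bones (for example, extra spine or finger segments) gives longer chains, and therefore finer articulation, at the cost of needing motion data for each additional joint.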

Syncing:

We chose the Rokoko Smartsuit as the source of motion capture data for synchronising the digital twin for a variety of reasons, including that the skeletal rig animation data produced by Rokoko Studio can be consumed by a wide range of commercial 3D modelling software. For our workflow, we created a software tool called HoloTwin that applies this data in real time to the 3D human model produced by DigiDoppel. HoloTwin also allows the final digital twin to be viewed in 3D on a 3D light-field display. We also validated the workflow using the open-source 3D modelling software Blender and Autodesk's MotionBuilder.
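HoloTwin's internals are not described here, but the core step of driving any rig from motion capture is forward kinematics: each frame supplies a rotation per bone, and joint positions are accumulated from the root outwards. The following is a minimal sketch under stated assumptions: rotations arrive as unit quaternions `(w, x, y, z)` keyed by bone name, and bones are listed parent-before-child with rest offsets. This is not the Rokoko Studio data format, and the function names, bone names and offsets (in centimetres) are hypothetical.

```python
import math


def q_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_rotate(q, v):
    """Rotate 3D vector v by unit quaternion q (computes q * v * conj(q))."""
    w, x, y, z = q
    p = q_mul(q_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return p[1:]


def axis_angle(axis, degrees):
    """Unit quaternion for a rotation of `degrees` about a unit `axis`."""
    half = math.radians(degrees) / 2
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def forward_kinematics(bones, local_rotations):
    """World-space joint positions for one frame of (hypothetical) mocap data.

    `bones`: list of (name, parent, offset), parents listed before children,
    where offset is the joint's rest position relative to its parent.
    `local_rotations`: bone name -> unit quaternion for this frame;
    bones without an entry keep their rest orientation.
    """
    world_rot, world_pos = {}, {}
    for name, parent, offset in bones:
        local = local_rotations.get(name, (1.0, 0.0, 0.0, 0.0))
        if parent is None:
            world_rot[name] = local
            world_pos[name] = tuple(offset)
        else:
            # A child's offset is rotated by its parent's accumulated rotation
            rotated = q_rotate(world_rot[parent], offset)
            world_pos[name] = tuple(p + r for p, r in zip(world_pos[parent], rotated))
            world_rot[name] = q_mul(world_rot[parent], local)
    return world_pos


# Hypothetical arm chain: shoulder -> elbow (30 cm) -> wrist (25 cm)
arm = [
    ("Shoulder", None, (0.0, 0.0, 0.0)),
    ("Elbow", "Shoulder", (30.0, 0.0, 0.0)),
    ("Wrist", "Elbow", (25.0, 0.0, 0.0)),
]
frame = {"Shoulder": axis_angle((0.0, 0.0, 1.0), 90)}
print(forward_kinematics(arm, frame)["Wrist"])
```

Rotating the shoulder by 90 degrees about the z axis moves the wrist from its rest position (55, 0, 0) to approximately (0, 55, 0), because the rotation propagates down the chain. Running this once per incoming frame is what lets a rig follow a wearer in real time.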

Conclusion:

Digitally twinning a human is possible with the technology available today, as we showed with this initial concept demonstrator, built within a very short timeframe and to imposed precision and accuracy requirements.

Dstl presented the results of this work at a UK Defence Surgical Research Conference in London in July 2022 to explore its potential in Defence Medicine.

Going forward, a personalised human digital twin that uses and integrates all available personal health data could help to address long waiting lists in overwhelmed healthcare systems. At Holoxica, we're already working towards humanising, digitising and democratising healthcare communications in natural 3D with our 3D Telemedicine platform. Technology is going to have a much greater impact on monitoring and delivering healthcare in the future. Watch this space, and reach out if you want to explore more Human Digital Twin projects.